Easy Serverless RAG with Knowledge Base for Amazon Bedrock

Discover how to enhance foundation models (FMs) with contextual data through Retrieval Augmented Generation (RAG) to provide more relevant, accurate, and customized responses.

Published Feb 7, 2024

Last Modified Feb 12, 2024

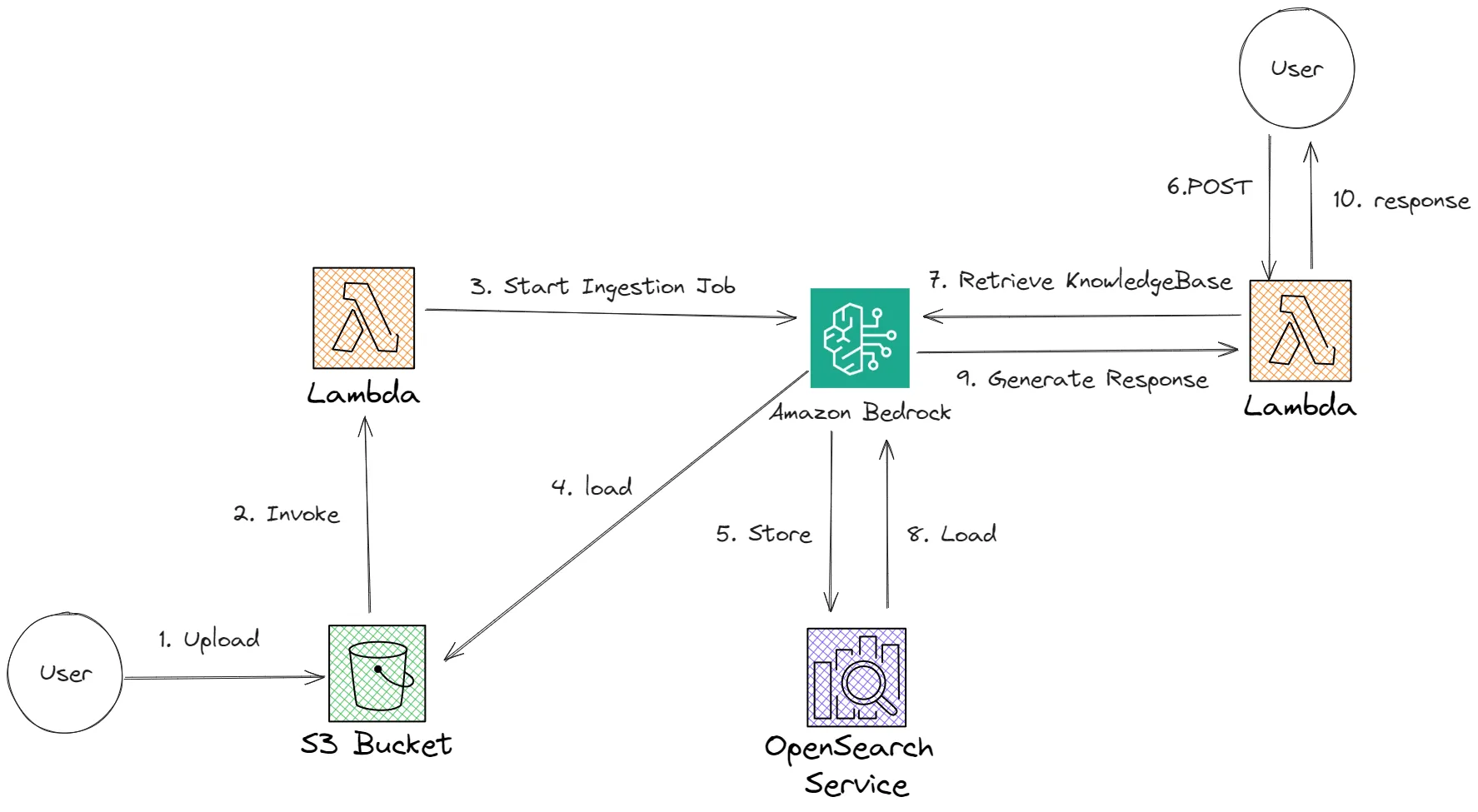

In this article, we will learn and experiment with Amazon Bedrock Knowledge Base and AWS Generative AI CDK Constructs to build fully managed serverless RAG solutions to securely connect foundation models (FMs) in Amazon Bedrock to our custom data for Retrieval Augmented Generation (RAG).

We will build a simple Resume AI that can respond with more relevant, context-specific, and accurate responses according to the resume data we provided.

We will use several AWS Services like Amazon Bedrock, AWS Lambda, and AWS CDK.

- Knowledge Bases for Amazon Bedrock provides a fully managed RAG experience and the easiest way to get started with RAG in Amazon Bedrock.

- AWS CDK lets us define our cloud infrastructure on AWS in code.

- AWS Lambda is our serverless function to generate responses from Amazon Bedrock to the user.

Retrieval-augmented generation (RAG) is a technique in artificial intelligence that combines information retrieval with text generation. It's used to improve the quality of large language models (LLMs) by allowing them to access and process information from external knowledge bases. This can make LLMs more accurate, up-to-date, and relevant to specific domains or tasks.

By combining information retrieval with text generation, RAG can:

- Improve the accuracy and factual consistency of LLM responses. LLMs are trained on massive amounts of text data, but this data may not always be accurate or up-to-date. By accessing external knowledge bases, RAG can ensure that LLMs are using the most current and reliable information to generate their responses.

- Make LLMs more relevant to specific domains or tasks. By focusing on retrieving information from specific knowledge bases, RAG can tailor LLMs to specific domains or tasks. This can be useful for applications such as question answering, summarization, and translation.

- Provide transparency and explainability. RAG can provide users with the sources of the information that was used to generate a response. This can help users to understand how the LLM arrived at its answer and to assess its credibility.

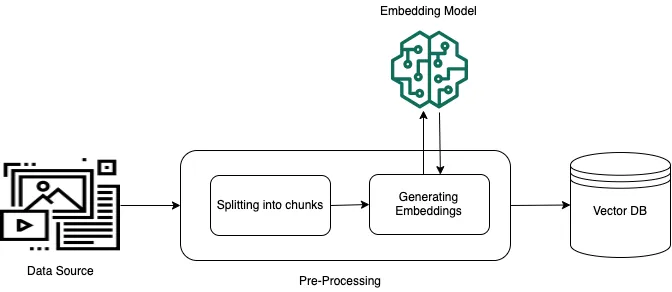

Pre-processing data

To enable effective retrieval from private data, a common practice is to first split the documents into manageable chunks for efficient retrieval. The chunks are then converted to embeddings and written to a vector index, while maintaining a mapping to the original document. These embeddings are used to determine semantic similarity between queries and text from the data sources. The following image illustrates pre-processing of data for the vector database.

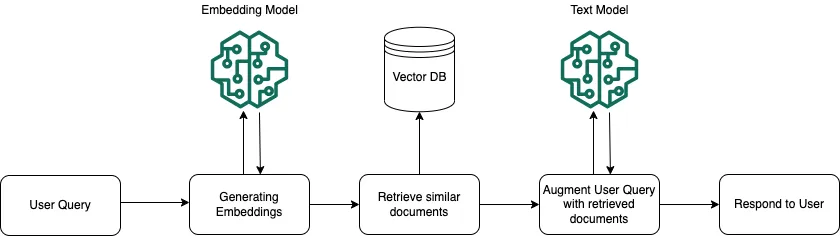
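The chunking step described above can be sketched in a few lines of JavaScript. This is only an illustration of fixed-size chunking with overlap, not how Knowledge Bases for Amazon Bedrock implements it: the `chunkText` function is a hypothetical helper, and whitespace-separated words stand in for model tokens.

```javascript
// Split text into fixed-size chunks where consecutive chunks share some words.
// Assumption: one whitespace-separated word is treated as one "token".
function chunkText(text, maxTokens = 500, overlapPercentage = 20) {
  const words = text.split(/\s+/).filter(Boolean);
  const overlap = Math.floor((maxTokens * overlapPercentage) / 100);
  const step = Math.max(1, maxTokens - overlap); // how far each new chunk advances
  const chunks = [];
  for (let start = 0; start < words.length; start += step) {
    chunks.push(words.slice(start, start + maxTokens).join(" "));
    if (start + maxTokens >= words.length) break; // this chunk reached the end
  }
  return chunks;
}

// Tiny example: 10 words, chunks of 4 words, 50% overlap.
console.log(chunkText("a b c d e f g h i j", 4, 50));
```

The overlap means a sentence that straddles a chunk boundary still appears whole in at least one chunk, at the cost of storing some text twice.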

At runtime, an embedding model is used to convert the user's query to a vector. The vector index is then queried to find chunks that are semantically similar to the user's query by comparing document vectors to the user query vector. In the final step, the user prompt is augmented with the additional context from the chunks that are retrieved from the vector index. The prompt alongside the additional context is then sent to the model to generate a response for the user. The following image illustrates how RAG operates at runtime to augment responses to user queries.
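The runtime flow above can be sketched as follows. This is a toy illustration under stated assumptions: the three-dimensional vectors are made up rather than produced by a real embedding model, and `retrieve` and `augmentPrompt` are hypothetical helper names, not part of any AWS API.

```javascript
// Cosine similarity between two vectors of equal length.
function cosineSimilarity(a, b) {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Return the topK index entries most similar to the query vector.
function retrieve(queryVector, index, topK = 2) {
  return index
    .map((entry) => ({ ...entry, score: cosineSimilarity(queryVector, entry.vector) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, topK);
}

// Prepend the retrieved chunks to the user's question.
function augmentPrompt(question, chunks) {
  const context = chunks.map((chunk) => chunk.text).join("\n");
  return `Use the following context to answer.\n\nContext:\n${context}\n\nQuestion: ${question}`;
}

// Toy vector index (vectors are invented for illustration).
const index = [
  { text: "Fry Cook at the Krusty Krab since 1999.", vector: [0.9, 0.1, 0.0] },
  { text: "Hobbies: jellyfishing, karate, ukulele.", vector: [0.0, 0.2, 0.9] },
  { text: "Certifications: AWS Developer Associate.", vector: [0.1, 0.9, 0.1] },
];

// Pretend this is the embedding of the question below.
const queryVector = [0.2, 0.95, 0.05];
const topChunks = retrieve(queryVector, index, 1);
console.log(augmentPrompt("What certifications do you own?", topChunks));
```

In a real deployment the vector index performs the similarity search for us; the sketch only shows why semantically close chunks end up in the prompt.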

Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies like AI21 Labs, Anthropic, Cohere, Meta, Stability AI, and Amazon via a single API, along with a broad set of capabilities you need to build generative AI applications with security, privacy, and responsible AI.

Since Amazon Bedrock is serverless, you don't have to manage any infrastructure, and you can securely integrate and deploy generative AI capabilities into your applications using the AWS services you are already familiar with.

With a knowledge base, you can securely connect foundation models (FMs) in Amazon Bedrock to your company data for Retrieval Augmented Generation (RAG). Access to additional data helps the model generate more relevant, context-specific, and accurate responses without continuously retraining the FM.

Knowledge Bases gives you a fully managed RAG experience and the easiest way to get started with RAG in Amazon Bedrock.

Knowledge Bases for Amazon Bedrock manages the end-to-end RAG workflow for you. You specify the location of your data, select an embedding model to convert the data into vector embeddings, and have Amazon Bedrock create a vector store in your account to store the vector data. When you select this option (available only in the console), Amazon Bedrock creates a vector index in Amazon OpenSearch Serverless in your account, removing the need to manage anything yourself.

AWS Cloud Development Kit (CDK) is an open-source software development framework to define cloud infrastructure in code and provision it through AWS CloudFormation. The AWS CDK allows developers to model infrastructure using familiar programming languages, such as TypeScript, Python, Java, C#, and others, rather than using traditional YAML or JSON templates.

- We will start by creating our project folder with the following command in our terminal.

```shell
mkdir resume-ai && cd resume-ai
```

- Then initialize our CDK project.

```shell
cdk init app --language javascript
```

- Next, prepare our resume data in a text or PDF file and save it as `data/resume.txt` with example content like this.

```text
Personal Information:
Name: SpongeBob SquarePants
Address: 124 Conch Street, Bikini Bottom
Phone: 555-555-5555
Email: spongebob@krustykrab.com

Objective:
Enthusiastic and hardworking sea sponge seeking a challenging and rewarding position. Eager to apply my skills and positive attitude to contribute to a dynamic work environment.

Education:
- Jellyfishing School
Graduated with Honors

Certifications:
- AWS Cloud Practitioner Foundational
- AWS Developer Associate
- AWS Machine Learning Speciality

Work Experience:
1. Krusty Krab - Bikini Bottom, Pacific Ocean
Job Title: Fry Cook
Dates: 1999 - Present
Key responsibilities:
- Mastering the art of Krabby Patty flipping.
- Ensuring a clean and organized kitchen.
- Providing excellent customer service with a smile.

2. Jellyfish Fields - Bikini Bottom, Pacific Ocean
Job Title: Jellyfishing Enthusiast
Dates: Summers of 1999 - Present
Responsibilities:
- Expert in jellyfishing techniques.
- Successfully captured and released jellyfish.
- Shared jellyfishing knowledge with fellow enthusiasts.

Skills:
- Cooking: Proficient in preparing Krabby Patties and other Bikini Bottom delicacies.
- Optimism: Known for my positive attitude and ability to find joy in any situation.
- Teamwork: Collaborative team player, skilled at working with diverse personalities.
- Adaptability: Thrives in fast-paced and unpredictable environments.
- Communication: Excellent communication skills, both with colleagues and customers.

Certifications:
- Certified Krabby Patty Expert - Krusty Krab Training Program
- Jellyfishing License - Jellyfishing School

Hobbies:
- Jellyfishing
- Karate
- Playing the ukulele

References:
- Mr. Eugene Krabs - Owner, Krusty Krab
Contact: 555-1234, krabs@krustykrab.com
- Squidward Tentacles - Co-worker, Krusty Krab
Contact: 555-5678, squidward@krustykrab.com
```

- We will create a couple of Lambda functions in the `src` folder.

```shell
mkdir src
```

- Create our first Lambda function in `src/queryKnowledgeBase`.

```shell
mkdir src/queryKnowledgeBase && touch src/queryKnowledgeBase/index.js
```

- This function will handle user queries and return the RAG response from the Bedrock model.

- Type the following function code inside `src/queryKnowledgeBase/index.js`.

```javascript
const {
  BedrockAgentRuntimeClient,
  RetrieveAndGenerateCommand,
} = require("@aws-sdk/client-bedrock-agent-runtime");

const client = new BedrockAgentRuntimeClient({
  region: process.env.AWS_REGION,
});

exports.handler = async (event, context) => {
  // The front end sends a JSON body like { "question": "..." }
  const { question } = JSON.parse(event.body);

  const input = {
    // RetrieveAndGenerateRequest
    input: {
      // RetrieveAndGenerateInput
      text: question, // required
    },
    retrieveAndGenerateConfiguration: {
      // RetrieveAndGenerateConfiguration
      type: "KNOWLEDGE_BASE", // required
      knowledgeBaseConfiguration: {
        // KnowledgeBaseRetrieveAndGenerateConfiguration
        knowledgeBaseId: process.env.KNOWLEDGE_BASE_ID, // required
        modelArn: `arn:aws:bedrock:${process.env.AWS_REGION}::foundation-model/anthropic.claude-v2`, // required
      },
    },
  };

  const command = new RetrieveAndGenerateCommand(input);
  const response = await client.send(command);

  // Return only the generated text to the caller
  return JSON.stringify({
    response: response.output.text,
  });
};
```
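The function above returns only the generated text, but the `RetrieveAndGenerate` response also carries citations that point back to the source documents, which is what makes the transparency benefit of RAG usable in practice. The following is a minimal sketch of collecting those sources; `extractSources` is a hypothetical helper and the sample object is hand-written to resemble the response shape, so verify the field names against the SDK documentation before relying on it.

```javascript
// Collect the unique S3 URIs cited in a RetrieveAndGenerate-style response.
function extractSources(response) {
  const uris = new Set();
  for (const citation of response.citations ?? []) {
    for (const reference of citation.retrievedReferences ?? []) {
      const uri = reference.location?.s3Location?.uri;
      if (uri) uris.add(uri);
    }
  }
  return [...uris];
}

// Hand-written sample shaped like the API response (all values are made up).
const sampleResponse = {
  output: { text: "I hold three AWS certifications." },
  citations: [
    {
      retrievedReferences: [
        {
          content: { text: "Certifications: ..." },
          location: { type: "S3", s3Location: { uri: "s3://example-bucket/resume.txt" } },
        },
      ],
    },
    {
      retrievedReferences: [
        {
          content: { text: "Work Experience: ..." },
          location: { type: "S3", s3Location: { uri: "s3://example-bucket/resume.txt" } },
        },
      ],
    },
  ],
};

console.log(extractSources(sampleResponse));
```

The handler could include the result next to `response` in its JSON output so the front end can show where an answer came from.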

- Then create our second Lambda function in `src/IngestJob`.

```shell
mkdir src/IngestJob && touch src/IngestJob/index.js
```

- This function will start an ingestion job in the Bedrock knowledge base to pre-process our data. It is triggered whenever we upload data to the S3 bucket.

- Type the following function code inside `src/IngestJob/index.js`.

```javascript
const {
  BedrockAgentClient,
  StartIngestionJobCommand,
} = require("@aws-sdk/client-bedrock-agent"); // CommonJS import

const client = new BedrockAgentClient({ region: process.env.AWS_REGION });

exports.handler = async (event, context) => {
  const input = {
    // StartIngestionJobRequest
    knowledgeBaseId: process.env.KNOWLEDGE_BASE_ID, // required
    dataSourceId: process.env.DATA_SOURCE_ID, // required
    clientToken: context.awsRequestId, // optional idempotency token; reuses the Lambda request ID
  };

  const command = new StartIngestionJobCommand(input);
  const response = await client.send(command);
  console.log(response);

  return JSON.stringify({
    ingestionJob: response.ingestionJob,
  });
};
```
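The ingestion function ignores the incoming S3 event, which is fine because every upload re-syncs the whole data source. If we want to log which files triggered a run, a small sketch like the one below could help. `uploadedKeys` is a hypothetical helper and the sample event is hand-written; it relies on the fact that object keys in S3 event notifications are URL-encoded, with spaces sent as `+`.

```javascript
// Return the decoded object keys from an S3 event notification.
function uploadedKeys(event) {
  return (event.Records ?? []).map((record) =>
    // Keys arrive URL-encoded, and spaces are encoded as "+"
    decodeURIComponent(record.s3.object.key.replace(/\+/g, " "))
  );
}

// Hand-written sample event containing only the fields the helper reads.
const sampleEvent = {
  Records: [
    {
      s3: {
        bucket: { name: "example-bucket" },
        object: { key: "my+resume%281%29.txt" },
      },
    },
  ],
};

console.log(uploadedKeys(sampleEvent));
```

Inside the handler, `console.log(uploadedKeys(event))` before starting the ingestion job would record the triggering files in CloudWatch Logs.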

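- Then, to start building our service with CDK, install the constructs library in the project (for example with `npm install @cdklabs/generative-ai-cdk-constructs`), open the `lib/resume-ai-stack.js` file, and type the following code.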

```javascript
const { Stack, Duration, CfnOutput, RemovalPolicy } = require("aws-cdk-lib");
const s3 = require("aws-cdk-lib/aws-s3");
const lambda = require("aws-cdk-lib/aws-lambda");
const { bedrock } = require("@cdklabs/generative-ai-cdk-constructs");
const { S3EventSource } = require("aws-cdk-lib/aws-lambda-event-sources");
const iam = require("aws-cdk-lib/aws-iam");

class ResumeAiStack extends Stack {
  /**
   *
   * @param {Construct} scope
   * @param {string} id
   * @param {StackProps=} props
   */
  constructor(scope, id, props) {
    super(scope, id, props);

    // Bucket that holds the resume data
    const resumeBucket = new s3.Bucket(this, "resumeBucket", {
      removalPolicy: RemovalPolicy.DESTROY,
      autoDeleteObjects: true,
    });

    // Knowledge base backed by the Titan text embeddings model
    const resumeKnowledgeBase = new bedrock.KnowledgeBase(
      this,
      "resumeKnowledgeBase",
      {
        embeddingsModel: bedrock.BedrockFoundationModel.TITAN_EMBED_TEXT_V1,
      }
    );

    // S3 data source with fixed-size chunking
    const resumeDataSource = new bedrock.S3DataSource(
      this,
      "resumeDataSource",
      {
        bucket: resumeBucket,
        knowledgeBase: resumeKnowledgeBase,
        dataSourceName: "resume",
        chunkingStrategy: bedrock.ChunkingStrategy.FIXED_SIZE,
        maxTokens: 500,
        overlapPercentage: 20,
      }
    );

    // Trigger ingestion whenever an object is put into the bucket
    const s3PutEventSource = new S3EventSource(resumeBucket, {
      events: [s3.EventType.OBJECT_CREATED_PUT],
    });

    const lambdaIngestionJob = new lambda.Function(this, "IngestionJob", {
      runtime: lambda.Runtime.NODEJS_20_X,
      handler: "index.handler",
      code: lambda.Code.fromAsset("./src/IngestJob"),
      timeout: Duration.minutes(5),
      environment: {
        KNOWLEDGE_BASE_ID: resumeKnowledgeBase.knowledgeBaseId,
        DATA_SOURCE_ID: resumeDataSource.dataSourceId,
      },
    });

    lambdaIngestionJob.addEventSource(s3PutEventSource);

    lambdaIngestionJob.addToRolePolicy(
      new iam.PolicyStatement({
        actions: ["bedrock:StartIngestionJob"],
        resources: [resumeKnowledgeBase.knowledgeBaseArn],
      })
    );

    const lambdaQuery = new lambda.Function(this, "Query", {
      runtime: lambda.Runtime.NODEJS_20_X,
      handler: "index.handler",
      code: lambda.Code.fromAsset("./src/queryKnowledgeBase"),
      timeout: Duration.minutes(5),
      environment: {
        KNOWLEDGE_BASE_ID: resumeKnowledgeBase.knowledgeBaseId,
      },
    });

    // Public function URL used by the front end
    const fnUrl = lambdaQuery.addFunctionUrl({
      authType: lambda.FunctionUrlAuthType.NONE,
      invokeMode: lambda.InvokeMode.BUFFERED,
      cors: {
        allowedOrigins: ["*"],
        allowedMethods: [lambda.HttpMethod.POST],
      },
    });

    lambdaQuery.addToRolePolicy(
      new iam.PolicyStatement({
        actions: [
          "bedrock:RetrieveAndGenerate",
          "bedrock:Retrieve",
          "bedrock:InvokeModel",
        ],
        resources: ["*"],
      })
    );

    new CfnOutput(this, "KnowledgeBaseId", {
      value: resumeKnowledgeBase.knowledgeBaseId,
    });

    new CfnOutput(this, "QueryFunctionUrl", {
      value: fnUrl.url,
    });

    new CfnOutput(this, "ResumeBucketName", {
      value: resumeBucket.bucketName,
    });
  }
}

module.exports = { ResumeAiStack };
```

- Then we can start deploying our services with CDK.

```shell
cdk deploy
```

- After deployment is complete, CDK will return several output values including `KnowledgeBaseId`, `QueryFunctionUrl`, and `ResumeBucketName`.

```text
✨ Deployment time: 548.72s

Outputs:
KnowledgeBaseCdkStack.KnowledgeBaseId = 6U5AOPLPCN
KnowledgeBaseCdkStack.QueryFunctionUrl = https://gm7poxeeiqp3pc652e5repig640wuivw.lambda-url.us-east-1.on.aws/
KnowledgeBaseCdkStack.ResumeBucketName = knowledgebasecdkstack-resumebucket4dbbd0e5-qcstjyegctqe
```

- Based on the output above, we will sync all files in our `data` folder to the S3 bucket named `knowledgebasecdkstack-resumebucket4dbbd0e5-qcstjyegctqe`.

```shell
aws s3 sync data s3://knowledgebasecdkstack-resumebucket4dbbd0e5-qcstjyegctqe
```

- To start sending questions and receiving responses, we can build our front end with the following page.

```html
<html lang="en">
  <head>
    <meta charset="UTF-8" />
    <meta name="viewport" content="width=device-width, initial-scale=1.0" />
    <title>Resume AI</title>
    <link
      rel="stylesheet"
      href="https://cdnjs.cloudflare.com/ajax/libs/bootstrap/5.3.2/css/bootstrap.min.css"
      integrity="sha512-b2QcS5SsA8tZodcDtGRELiGv5SaKSk1vDHDaQRda0htPYWZ6046lr3kJ5bAAQdpV2mmA/4v0wQF9MyU6/pDIAg=="
      crossorigin="anonymous"
    />
  </head>
  <body>
    <div class="container">
      <div class="row mt-2">
        <div class="col-4 d-flex align-items-center">
          <img
            src="https://avatarfiles.alphacoders.com/125/125254.png"
            alt="avatar"
            class="w-100 rounded"
          />
        </div>
        <div class="col-8">
          <div style="height: 100px">
            <div
              class="h-100 inline-block overflow-auto border border-secondary-subtle rounded p-2"
              id="response"
            ></div>
          </div>
          <input
            type="text"
            name="question"
            id="question"
            class="form-control my-2"
            placeholder="Enter your question here..."
          />
        </div>
      </div>
      <h2 class="mt-2">Personal Information:</h2>
      <ul>
        <li>Name: SpongeBob SquarePants</li>
        <li>Address: 124 Conch Street, Bikini Bottom</li>
        <li>Phone: 555-555-5555</li>
        <li>Email: spongebob@krustykrab.com</li>
      </ul>
      <h2>Objective:</h2>
      <p>
        Enthusiastic and hardworking sea sponge seeking a challenging and
        rewarding position. Eager to apply my skills and positive attitude to
        contribute to a dynamic work environment.
      </p>
      <h2>Education:</h2>
      <p>Jellyfishing School Graduated with Honors</p>
      <h2>Certifications:</h2>
      <ul>
        <li>AWS Cloud Practitioner Foundational</li>
        <li>AWS Developer Associate</li>
        <li>AWS Machine Learning Speciality</li>
      </ul>
      <h2>Work Experience:</h2>
      <ul>
        <li>
          Krusty Krab - Bikini Bottom, Pacific Ocean Job Title: Fry Cook Dates:
          1999 - Present Key responsibilities: - Mastering the art of Krabby
          Patty flipping. - Ensuring a clean and organized kitchen. - Providing
          excellent customer service with a smile.
        </li>
        <li>
          Jellyfish Fields - Bikini Bottom, Pacific Ocean Job Title:
          Jellyfishing Enthusiast Dates: Summers of 1999 - Present
        </li>
      </ul>
      <h2>Responsibilities:</h2>
      <ul>
        <li>Expert in jellyfishing techniques.</li>
        <li>Successfully captured and released jellyfish.</li>
        <li>Shared jellyfishing knowledge with fellow enthusiasts.</li>
      </ul>
      <h2>Skills:</h2>
      <ul>
        <li>
          Cooking: Proficient in preparing Krabby Patties and other Bikini
          Bottom delicacies.
        </li>
        <li>
          Optimism: Known for my positive attitude and ability to find joy in
          any situation.
        </li>
        <li>
          Teamwork: Collaborative team player, skilled at working with diverse
          personalities.
        </li>
        <li>
          Adaptability: Thrives in fast-paced and unpredictable environments.
        </li>
        <li>
          Communication: Excellent communication skills, both with colleagues
          and customers.
        </li>
      </ul>
      <h2>Certifications:</h2>
      <ul>
        <li>Certified Krabby Patty Expert - Krusty Krab Training Program</li>
        <li>Jellyfishing License - Jellyfishing School</li>
      </ul>
      <h2>Hobbies:</h2>
      <ul>
        <li>Jellyfishing</li>
        <li>Karate</li>
        <li>Playing the ukulele</li>
      </ul>
      <h2>References:</h2>
      <ul>
        <li>
          Mr. Eugene Krabs
          <p>Owner, Krusty Krab Contact: 555-1234, krabs@krustykrab.com</p>
        </li>
        <li>
          Squidward Tentacles
          <p>
            Co-worker, Krusty Krab Contact: 555-5678, squidward@krustykrab.com
          </p>
        </li>
      </ul>
    </div>
    <script>
      const response = document.getElementById("response");
      const question = document.getElementById("question");

      function typeWriter(text, element, delay = 50) {
        let i = 0;
        const interval = setInterval(() => {
          if (i < text.length) {
            element.innerHTML += text.charAt(i);
            i++;
            element.scrollTop = element.scrollHeight;
          } else {
            clearInterval(interval);
          }
        }, delay);
      }

      question.addEventListener("focus", function () {
        this.select();
      });

      question.addEventListener("keyup", (e) => {
        if (e.key === "Enter") {
          e.preventDefault();
          response.innerHTML = "Thinking...";
          fetch(
            "https://gm7poxeeiqp3pc652e5repig640wuivw.lambda-url.us-east-1.on.aws/",
            {
              method: "POST",
              headers: {
                "Content-Type": "application/json",
              },
              body: JSON.stringify({ question: question.value }),
            }
          )
            .then((res) => res.json())
            .then((data) => {
              console.log(data);
              response.innerHTML = "";
              typeWriter(data.response, response);
            });
          question.blur();
        }
      });
    </script>
  </body>
</html>
```

Our front end will look like this.

- Then we can start testing our RAG responses by asking questions such as `Tell me about yourself` or `What certifications do you own?` in the chat box.

- It will return a response like this.

Finally, when we are done experimenting, we can delete all our services by running this command.

```shell
cdk destroy --force
```

In this article, we learned how easily we can build RAG solutions on AWS with Amazon Bedrock Knowledge Base, and we also looked at how we can use the experimental AWS Generative AI CDK Constructs to build our generative AI services.

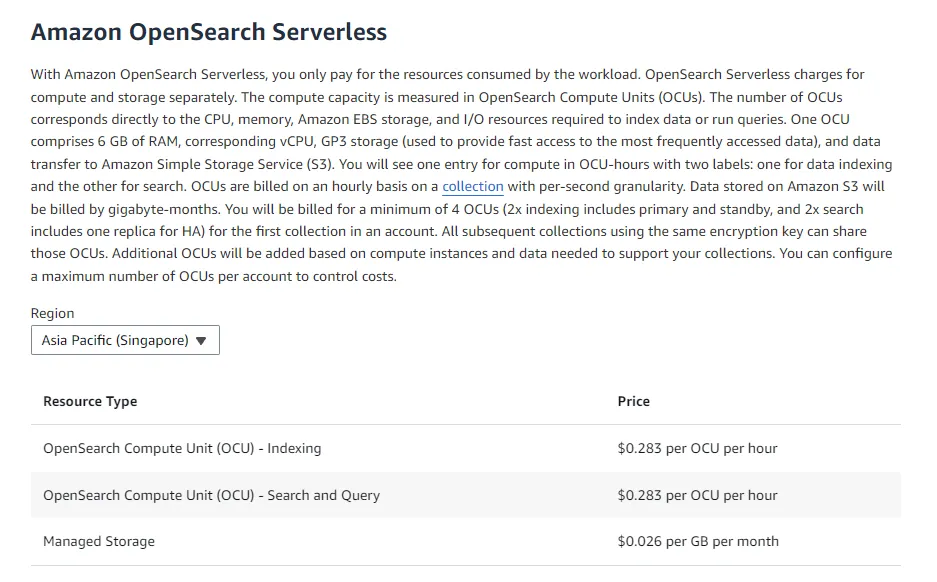

One thing to take note of is this CDK construct currently only supports Amazon OpenSearch Serverless. By default, this resource will create an OpenSearch Serverless vector collection and index for each Knowledge Base you create, with a minimum of 4 OCUs. But you can provide an existing collection and/or index to have more control.
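To get a feel for what a 4 OCU minimum can mean for the bill, here is a small arithmetic sketch. The hourly rate below is a hypothetical placeholder, not a quoted price, and `monthlyOcuCost` is an illustrative helper; check the current Amazon OpenSearch Serverless pricing for your Region before relying on the result.

```javascript
// Estimate the monthly cost of keeping a number of OCUs running continuously.
// Assumption: 730 hours per month (the average month length in hours).
function monthlyOcuCost(ocus, pricePerOcuHour, hoursPerMonth = 730) {
  return ocus * pricePerOcuHour * hoursPerMonth;
}

// Hypothetical rate of 0.24 USD per OCU-hour, used only for illustration.
const assumedPricePerOcuHour = 0.24;
console.log(monthlyOcuCost(4, assumedPricePerOcuHour).toFixed(2));
```

Because the collection is billed while it exists, running `cdk destroy` after experimenting is the simplest way to stop the charges.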